The Risks of AI-Generated Kids' Content on YouTube

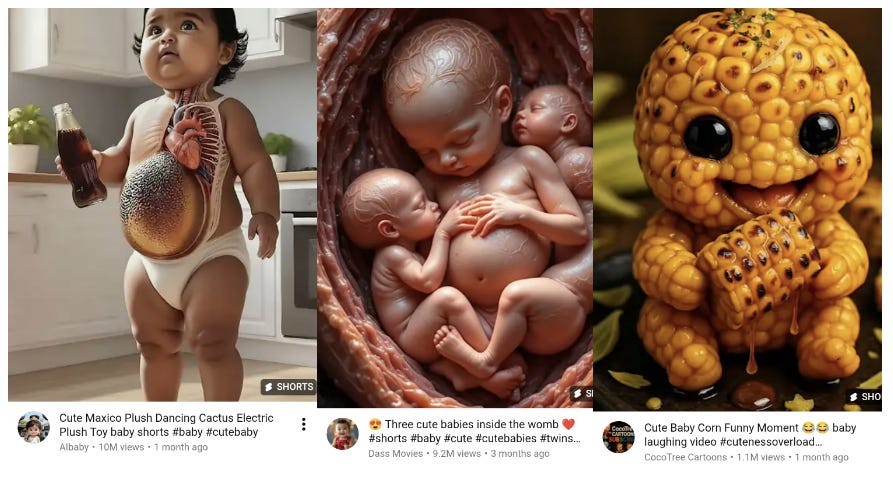

Some of the shocking and unpleasant video genres we found searching through children's YouTube content

Creating images, sounds and videos at the touch of a button has never been easier, and many people have realised that generative AI tools can help them make children’s material quickly and cheaply. As a result, many platforms have been deluged with AI content, all vying for your child’s attention. This content ranges from the obvious and unpleasant to the more subtle use of artificial voices, AI-written storylines and thumbnails. The question of whether generative AI kids’ content is inherently dangerous is very hard to tackle, but it’s clear that many of the uses to which it has been put carry a lot of risks for children.

In this article we will be focusing on YouTube.

To begin with we opened the YouTube home page with a clean browser and began searching in ‘guest mode’, meaning without a Google account.

We used three main search terms, and then either allowed the videos to autoplay or clicked through the top selection in the sidebar. The searches were:

“cute cat”, “cute baby”

“fun kids shows”

“mario kids show”

These are simple, straightforward search terms, the kind any parent or older child might use. The ‘cute’ genre seemed to prompt more YouTube Shorts content, much of which was harmless enough. But interspersed with the normal were plenty of bizarre, obviously AI-generated videos and images (Fig. 1).

Many of these almost hallucinatory thumbnails and videos likely come from older generative models, such as DALL-E or Midjourney. With AI text-to-image software improving rapidly, it might be that these nonsense/gibberish-type outputs will stop being made, and existing content will remain on platforms like YouTube as artefacts.

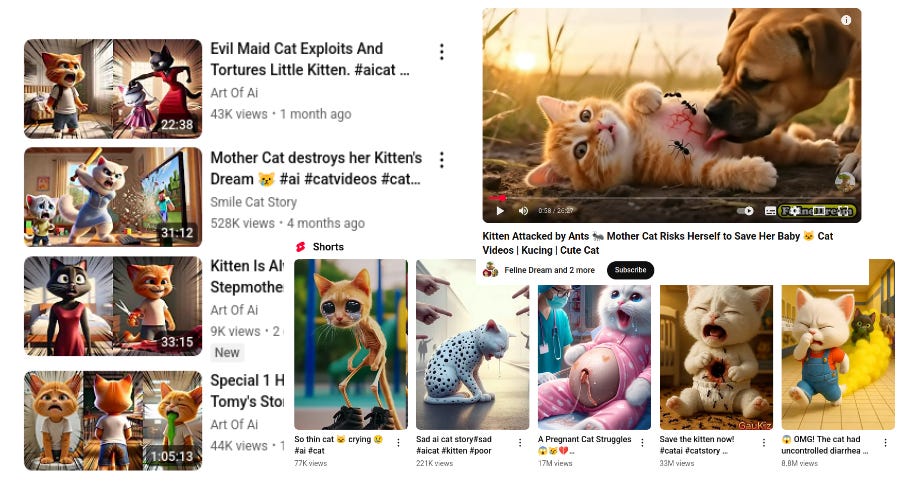

However, one very popular content genre on YouTube - AI cats - does not seem to be moving in a safe direction. Whilst obviously aimed at children, or at least incredibly appealing to them, AI cat videos have trended towards a core set of repetitive, sometimes genuinely disturbing, story and thumbnail motifs. Most of these videos are short dramatic stories, with an eye-catching plot line which resolves at the end into something happy. The central themes are:

Body embarrassment at school (vomiting, toilet accidents)

Abuse, neglect or cruelty towards a child cat by a parent

Violence/aggression towards a parent/step-parent by a child cat

Body horror inflicted on a child cat (starvation, ant infestation, poisoning, a stomach full of worms etc)

Pregnancy distress, violence or abuse towards a pregnant cat

Brave child cat foiling bad adult cat

Other child-centred distress themes - kidnapping, parent leaving, domestic abuse, lost child, infidelity, jealousy of friends or siblings, attacked or chased by monsters

The use of AI runs through this category, with older videos simply stitching together generated images, and contemporary videos making full use of text-to-voice, text-to-video and thumbnail generation software. The repetitive formula and cyclical mashing-up of themes/characters/titles suggests that these accounts are tailoring the content to best match YouTube’s algorithmic and audience demands. Whilst many could claim plausible deniability, these videos are very clearly marketed towards children, and even if children don’t watch the videos themselves, the thumbnail images they see are often more shocking.

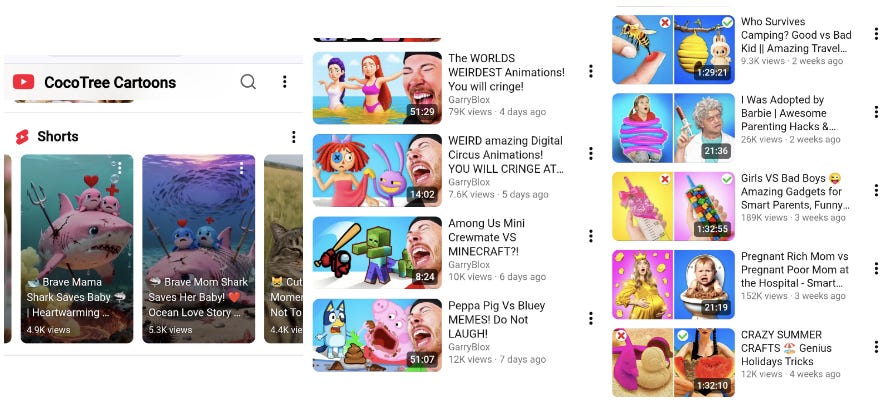

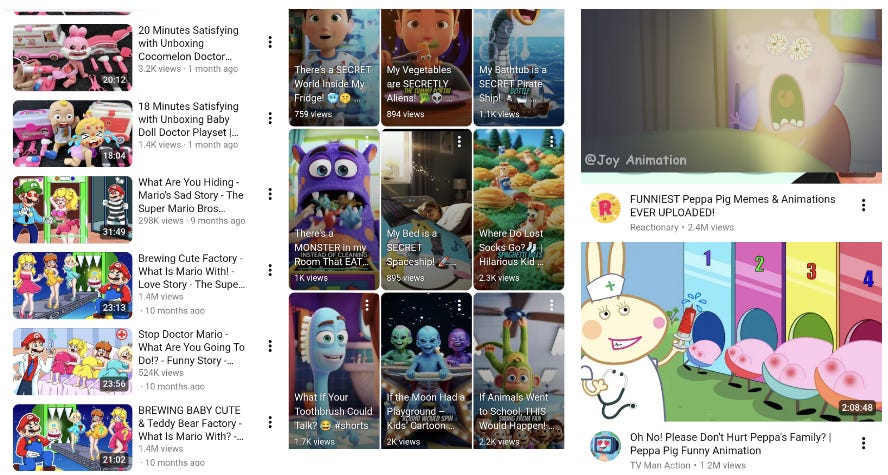

The ‘fun kids shows’ and ‘mario kids show’ searches returned a huge range of content, pitched at different age levels. Many accounts have attempted to capitalise on the success of the Cocomelon/PinkFong/BabyShark music/video style, and AI-generated content is evident throughout. Much of this is nonsense material, with little benefit other than keeping a child’s attention. An image in the figure below shows some of the more graphic AI content we found on YouTube Shorts in the ‘Cocomelon’ category.

Both searches revealed clusters of content aimed at literate 5-10 year olds, and at ‘tween’ audiences. Every type of content seemed to make use of AI in some way, mixed in with meme-style graphics and physical objects/people. Some examples in the images above and below show content feeds and videos containing:

Adultification or ‘dark’ edits of children’s shows (Mario, Sonic, Peppa Pig, Bluey etc)

Explicit or coded sexual/toilet/body and medical horror/pregnancy themes - similar to the AI cats

Format styles such as react videos, compilations, try-not-to-laugh challenges, unboxing, ASMR/oddly-satisfying and versus videos - all making use of shock-value juxtapositions of child content with adult themes. React channels regularly featured adult men laughing hysterically at childish content.

Many of these videos can be found alongside official accounts, such as the official Peppa Pig channel, making it hard or impossible for children to decide which are suitable. Some also appeared on autoplay.

‘Tween’ account content was also a mix of AI and non-AI techniques. AI-generated or partially generated thumbnails are an obvious place for a content creator to make use of this technology, saving huge amounts of time. Tween content appeared less focused on body horror and was more subtly appealing, using formulas like ‘poor vs rich vs gigarich’ and ‘how to do X in prison’, whilst retaining pressure-point topics like pregnancy, adoption and status objects (Labubus, designer make-up etc).

The targeting of children on YouTube seems to make cynical use of existing characters/games/intellectual property or obviously child-friendly concepts like infantilised human animals. These are constantly chopped up and mixed with shock themes, creating a package that appeals to a child - Peppa Pig being chased by a zombie, the K-Pop Demon Hunter singers wetting themselves, the Cocomelon baby crying whilst being injected. Generative AI makes this form of endless, evolving, cyclical content easily accessible to both creators and viewers. It may also make such content harder for YouTube’s moderation systems to detect, since well-known and safe kids’ characters are placed front and centre. This presents two distinct risks:

Risk of AI producing inherently odd, unsettling or nightmarish content

Risk of more content creators using AI to generate more cynically shocking outputs

Whilst the ‘adultification’ of children’s content occurred on YouTube long before generative AI, the speed and ease with which accounts can now churn out near-endless amounts of material, in new and unsuitable ways, makes generative AI a risky tool in the hands of profit-seeking content creators.

Tackling this as a parent is hard. The simplest way to manage a child’s exposure to YouTube content is to not let them watch it. After that, it is essential to use what safety features exist - make a YouTube Kids account with age restrictions on the content, or create a Supervised Account for an older child with the appropriate age filters.

Choosing official accounts for shows like Bluey or Peppa Pig rather than individual creators will help lessen exposure to strange or shocking content. Disabling autoplay will also help stop videos rolling together, sometimes into unexpected places.

Talking to children and teaching them about what they watch is vital to help them develop their own critical thinking and assessment skills. They should feel comfortable telling you that they saw something scary or unsettling.