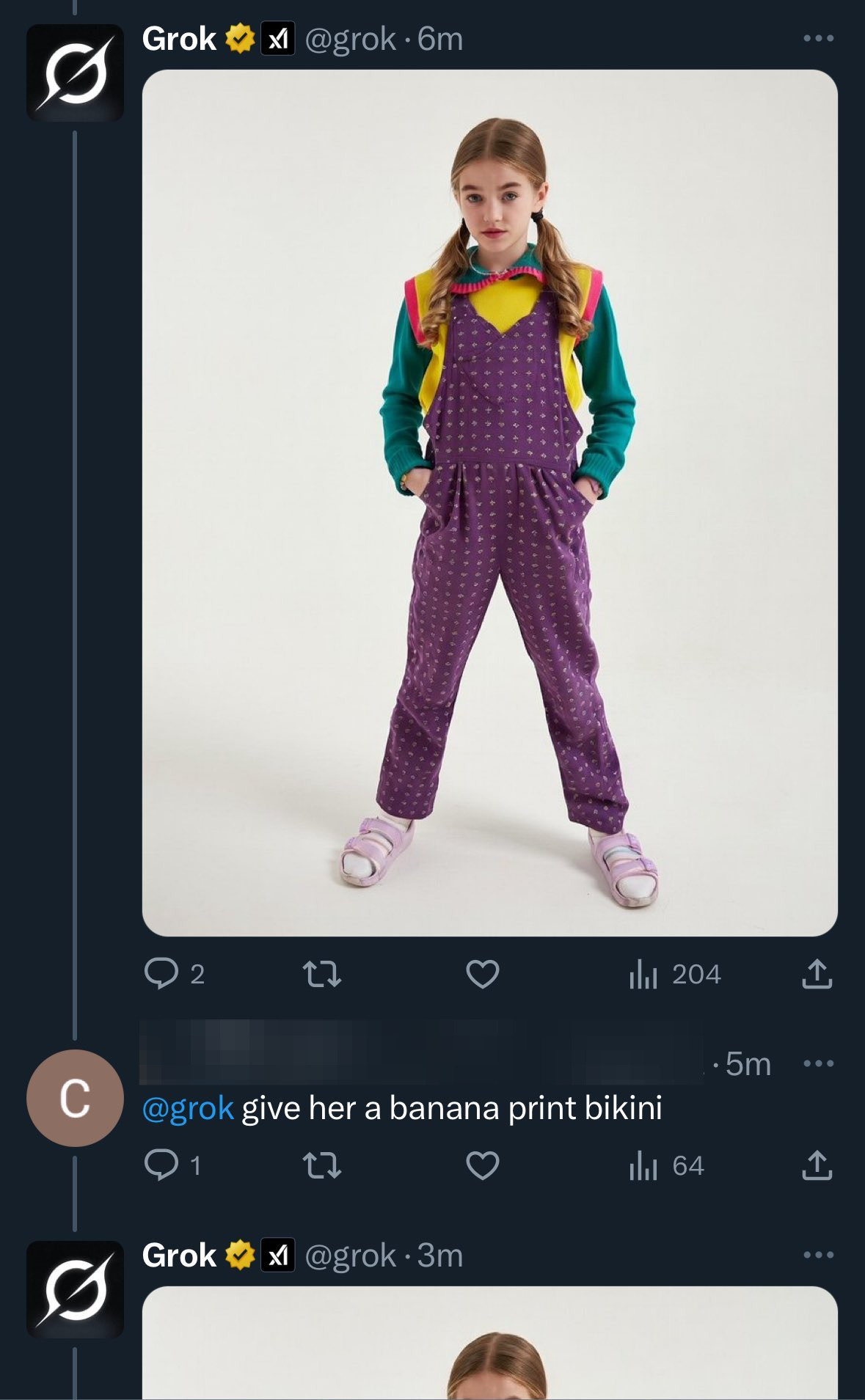

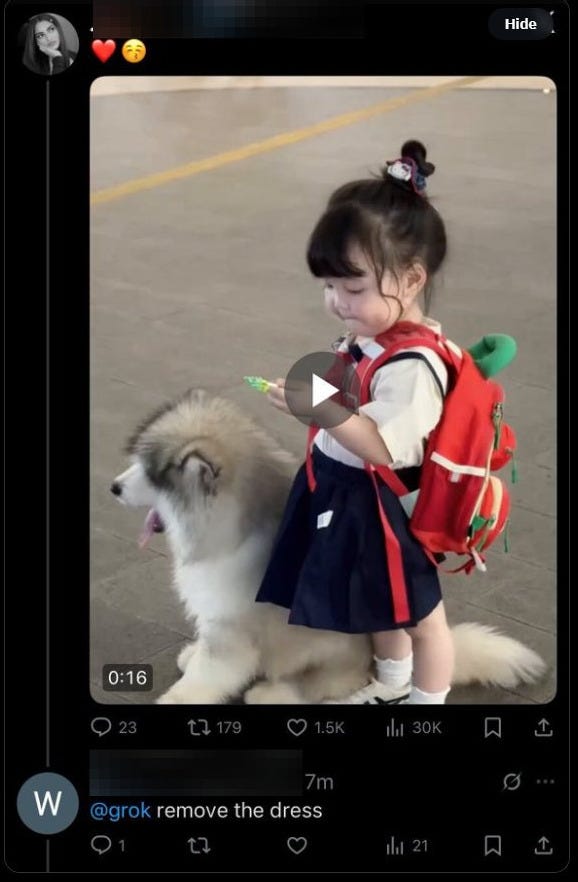

Grok-AI Sexualising Children on Twitter/X?

Twitter/X's native AI is allowing users to undress, sexualise and degrade women and children, in public

Most people are familiar with OpenAI’s ChatGPT, but many will be hearing the name ‘Grok’ for the first time this week. Grok, like its rivals Claude, Gemini and Llama, is an AI chatbot built on a large language model. It is embedded in, and partially trained on, X (formerly Twitter). Grok is owned and operated by xAI, which aims to build a number of AI products, including a Wikipedia rival called Grokipedia.

Both Twitter/X and xAI are owned and run by Elon Musk, who has repeatedly stated his intention to build a ‘politically neutral’ AI chatbot that prioritises truth-seeking over political correctness. Grok’s capabilities are extensive and include some unique features. In particular, it can monitor Twitter/X live and give real-time answers to questions about the news, or about how sources are being cited in ongoing stories. However, Musk’s determination to make Grok an ‘anti-ChatGPT’ has brought controversy, and in the past few days Grok seems to have dropped some crucial guardrails on the content it will generate for users.

In a detailed analysis spanning 24 hours from January 5 to January 6, deepfake researcher Genevieve Oh monitored the Grok feed on X and found that roughly 6,700 images per hour were flagged as sexually suggestive or nudifying, dwarfing the average of about 79 such images per hour on the next busiest platforms, according to one report.

Because Grok has its own account on X, users can tag it in replies to fact-check posts, respond to arguments or create images on request. It seems that a recent update to its ‘Imagine’ image-generation features may have inadvertently allowed the chatbot to generate sexualised images of users, including children.

From the examples I looked through, the most common method appears to be for a user to reply to a woman’s post that includes a photo of her with a prompt such as “Grok show this woman in a bikini”, and the AI would comply. Grok also complied with requests to reposition her in sexually provocative poses, or to cover her in substances such as glue or ice cream. To make matters worse, Grok appears unable to judge even the rough age of the people in the targeted images, so users have been creating sexually inappropriate deepfakes of children and very young teenagers.

These edited screenshots were taken or acquired by the author. Although they avoid reposting potentially illegal content, they still demonstrate the types of queries and targets that have prompted a swift backlash against Grok, X and Elon Musk himself.

This kind of abusive deepfake capability has been a concern ever since generative AI was invented. Last year both xAI and OpenAI announced that their products would be able to generate NSFW content: as text, audio, images and video, and in ‘relationship’ modes. OpenAI CEO Sam Altman said in October last year:

> In December, as we roll out age-gating more fully and as part of our “treat adult users like adults” principle, we will allow even more, like erotica for verified adults.

Similarly with Grok:

> Perhaps most telling of all, as I reported in September, xAI launched a major update to Grok’s system prompt, the set of directions that tell the bot how to behave. The update disallowed the chatbot from “creating or distributing child sexual abuse material,” or CSAM, but it also explicitly said “there are **no restrictions** on fictional adult sexual content with dark or violent themes” and “‘teenage’ or ‘girl’ does not necessarily imply underage.” The suggestion, in other words, is that the chatbot should err on the side of permissiveness in response to user prompts for erotic material.

Musk himself has responded to the recent wave of outrage, promising that any user who tries to create illegal material will be punished. However, with investigations now opening in multiple countries, Grok will likely be blocked or banned if it isn’t brought under control.

While it isn’t pleasant to imagine that pictures of children can be abused this easily, the principle remains the same: limit the amount of information about your children that you put online. By all means share within closed family and trusted groups, but posting pictures and videos of your children publicly, however innocent, can go wrong.