Child Safety News: November 2025

Meta's adverts, AI image abuse, sextortion and the UK's growing online policing

The past month has seen governments around the world continue to push for sweeping changes to how we use the internet: digital IDs, message scanning, online safety legislation, AI regulation, social media age restrictions, VPN bans and the removal of anonymity. We at DigiShield Kids believe that using far-reaching, privacy-ending tools to catch predators or block access to inappropriate content is unworkable and damaging, and we will write more on this soon.

Meta is earning a fortune on a deluge of fraudulent ads, documents show

Reuters has found, through a cache of previously unreported internal documents, that Meta is knowingly profiting from scam and fraudulent adverts, with an estimated 15 billion such adverts shown to its users every day. These included “fraudulent e-commerce and investment schemes, illegal online casinos, and the sale of banned medical products”. Meta's platforms, such as Facebook and Instagram, are popular with children, who may be exposed to these adverts and are more likely to fall for scam content.

Police investigation leads to 28 years behind bars for Snapchat groomer

On 11 November, Paul Lipscombe, 51, from Rothley in Leicestershire, was sentenced to 28 years for 34 crimes against six girls, all aged between 12 and 15. He used multiple fake accounts on Snapchat to contact and groom the girls, before arranging to meet them and ultimately assaulting them.

He was also charged with generating and distributing sexual images of children using AI, underscoring the complexity of these cases and the risks posed by emerging technologies.

1 in 5 UK parents know a child who’s been blackmailed online

This month the NSPCC released a new report, A collective concern: parent and carer views on the online blackmail of children and young people, based on a survey of 2,558 UK parents and carers. The report found that:

Around one in five parents and carers know and have supported a child who has been blackmailed online

Almost one in ten (9%) said that their own child had been blackmailed online

Despite this, two in five parents never speak to their children about the topic

Barriers given for not talking about online blackmail with children include fears of overreacting or scaring a child (32%), their child’s current mood or feelings (29%), and their child’s reluctance to talk about sensitive topics (25%).

Rani Govender, policy manager at the NSPCC, said:

“These findings show the scale of online blackmail that is taking place across the country, yet tech companies continue to fall short in their duty to protect children…

…While we push for systematic change, it’s crucial that parents feel equipped to have these difficult conversations with their children. Knowing how to talk about online blackmail in an age-appropriate way and creating an environment where children feel safe to come forward without fear of judgement can make all the difference.”

AI child sexual abuse imagery is not a future risk – it is a current and accelerating crisis

Delegates at the recent Child Dignity in the Artificial Intelligence Era conference were shocked to hear from the Internet Watch Foundation’s CEO, Kerry Smith, that the volume of AI-generated child sexual abuse images is increasing rapidly year on year. The IWF’s analysis for 2024 showed a 380% increase in AI-generated child abuse images, 98% of which depicted girls.

Smith continued:

“We’re not just seeing synthetic imitations of mild or ambiguous content; we’re talking about highly violent, deeply abusive material.

“There is no evidence to support the claim that AI-generated imagery could be used as a “safe substitute” that might somehow reduce harm by giving offenders a non-contact outlet. Using AI child sexual abuse material reinforces harmful sexual fantasies and normalises abusive behaviour rather than preventing it.”

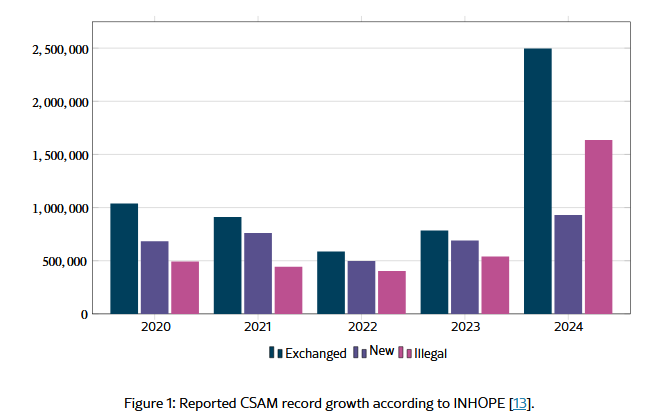

Just in Plain Sight: Unveiling CSAM Distribution Campaigns on the Clear Web

Further underlining the growing threat of both real and AI-generated child abuse images online, two researchers have demonstrated how this material has migrated over the past few years from the dark web onto mainstream social media platforms on the clear web. Organised criminal networks use bots, social media campaigns and huge quantities of abusive images to draw new users towards increasingly secretive and anonymous channels, such as Discord, Telegram and other private platforms.

Ofcom urges social media platforms to combat abuse and limit online ‘pile-ons’

In another proposed expansion of the UK’s Online Safety Act, Ofcom has issued social media companies with a number of ‘technically voluntary’ recommendations to limit the amount and severity of online abuse that women and girls receive. These measures include:

enforcing limits on the number of responses to posts on platforms such as X

platforms using a database of images to protect women and girls from the sharing of intimate images without the subject’s consent

the use of “hash-matching” technology, which allows platforms to detect and take down copies of an image that has been the subject of a complaint

deploying prompts asking people to think twice before posting abusive content

imposing “time-outs” for people who repeatedly misuse a platform

preventing misogynistic users from earning a share of advertising revenue related to their posts

allowing users to quickly block or mute multiple accounts at once

Ofcom will publish a report in 2027 assessing how well these companies have adopted the guidelines, with potential penalties and legislative changes for those that fall short.

Ofcom is monitoring VPN use following the Online Safety Act

Ofcom has also admitted to TechRadar that it is using an unnamed third-party AI tool to track UK VPN usage in the wake of the Online Safety Act. VPN use has spiked across Britain, in part because adults and children are using VPNs to circumvent age-verification and identity checks.

Although Baroness Lloyd has stated that there are “no current plans to ban the use of VPNs”, she has also said that “nothing is off the table” when it comes to protecting children online. A VPN ban would not only make academic, commercial and governmental work extremely difficult, since VPNs allow employees to access secure databases and tools remotely, but would also decimate online privacy and anonymity.

While using a third-party vendor isn’t surprising, the fact that Ofcom refuses to identify who it is raises concerns.

Ofcom has not responded to our follow-up request for additional information. That means there’s no way for the public to know whether the data provider is a company with a track record of protecting people’s privacy, or one known to use invasive surveillance techniques.

New analysis: 800,000 under-5s using social media

A new report from the Centre for Social Justice suggests that 37% of surveyed children aged between three and five use at least one social media platform every day. The researchers estimate this is around 800,000 children, many of whom fall into an age group for which the World Health Organisation recommends no more than one hour of sedentary screen time a day. Their analysis also showed:

Almost one in five (19 per cent) children aged three to five use social media independently

An estimated rise of 220,000 users under five in 2024 compared with the previous year

40 per cent of children under 13 have a social media profile despite restrictions

One in four 8 to 9-year-olds who game online report interacting with unknown individuals

Growing evidence that screen time causes increased anxiety and sleep disturbances